Data is becoming increasingly important for understanding markets and customer behavior, optimizing operations, deriving foresight, and gaining a competitive advantage. Over the last decade, the explosion of structured and unstructured data, along with digital technologies in general, has enabled new ways for customers to interact and new business models in the financial services industry.

Transformational Shifts in the Financial Services Industry and Increased Data Woes

The financial sector is probably one of the areas most affected by digital transformation. We often hear of new fintechs popping up and building enviable market value. In fact, the combined value of the six largest banks in the U.S. is now smaller than the combined value of four big fintech companies. The reason for this difference lies in the efficiency with which fintechs operate and the tendency of investors to reward that efficiency. While many factors determine how efficiently a financial institution operates, one of them is surely the ability to leverage modern data technologies for insights and real-time decision-making. Incumbent financial institutions, with vast, segmented data landscapes built around legacy systems, are failing to build holistic, real-time data delivery infrastructure. Fintechs with modern technology stacks, meanwhile, have capitalized on these market trends and penetrated the customer relationship market of the financial sector, which captures 70% of the value.

The slow loss of this valuable arm to new competitors, combined with lower interest rates, rising regulatory and compliance costs (almost 4X higher since the 2008 financial crisis), and soaring operating costs (technology assets and retail bank branches), has made many incumbent financial institutions vulnerable to high-cost traps. On top of these developments, new open banking regulations like PSD2, which makes third-party access to customer accounts obligatory through open APIs, further threaten to change the financial value chain (including loans) and business models. However, one may argue that PSD2 also gives financial services institutions (FSIs) an opportunity to monetize their data assets, provided they employ the right practices and technologies to achieve that.

Understanding Data Costs at Financial Institutions

In order for financial CIOs and data managers to learn how to create better value for their organizations using data technologies, they must first understand how they are investing in data and data-related efforts, as well as how a continuous increase in these costs adversely impacts the bottom line.

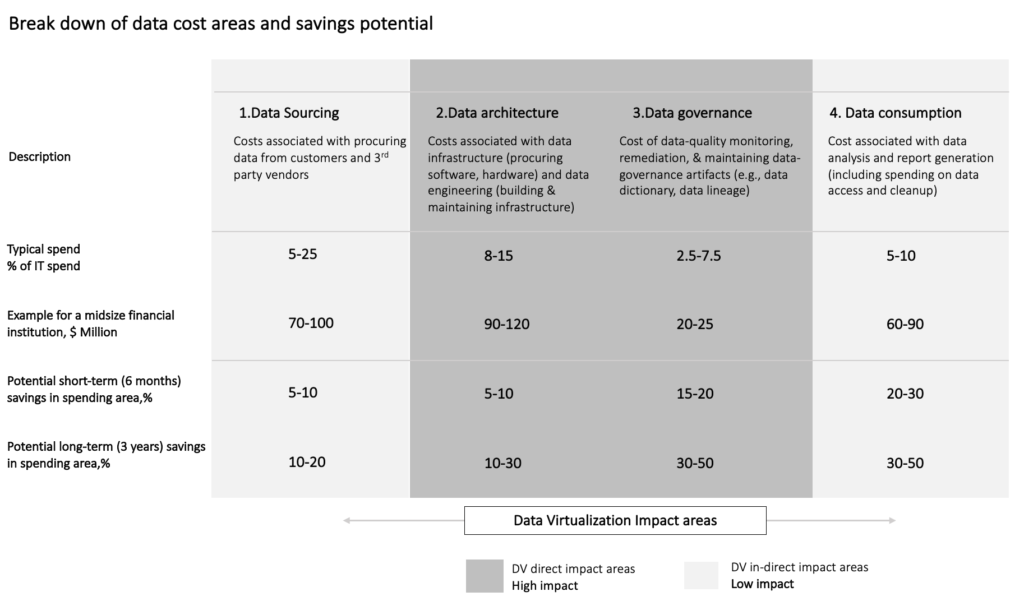

A recent McKinsey study shows that a typical midsize financial institution with $5 billion of operating costs spends more than $250 million on data across four areas: sourcing, architecture, governance, and consumption. Almost half of this cost is shared by data architecture and data governance. The study further digs into the cost optimization potential (listed below in Figure 1) in these areas through improving the productivity of data engineers, simplifying the data architecture, streamlining data controls and standards, and refining the scope of governance and security around data.

Figure 1: A breakdown of data cost areas and savings potential

Image source: Denodo Technologies GmbH, Data source: McKinsey & Company

Creating Tangible Business Value in the Financial Services Industry with Data Virtualization

Data virtualization, a way of integrating disparate data sources, is a relatively new concept and technology compared to traditional business intelligence (BI) and data warehousing technologies. It involves creating a logical data access layer that aggregates data from disparate data sources into a single, unified, cross-functional view of data for consumption. It neither stores nor replicates data. It only creates a logical abstraction layer on top of these disparate sources, while the data remains in the respective source systems.
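To make the idea of a logical access layer more concrete, here is a minimal Python sketch. It is not how any particular data virtualization product works internally. The two "source systems" (an in-memory SQLite database standing in for a core banking system and a dictionary standing in for a CRM), along with the names `fetch_accounts`, `fetch_crm`, and `CustomerView`, are all hypothetical and chosen only for illustration. The point it shows is that the view holds no copy of the data and combines the sources only when queried.

```python
import sqlite3
from typing import Callable, Dict, Iterator, List

# Source 1 (hypothetical): a core banking system, simulated with in-memory SQLite.
core_db = sqlite3.connect(":memory:")
core_db.execute("CREATE TABLE accounts (customer_id INTEGER, balance REAL)")
core_db.executemany(
    "INSERT INTO accounts VALUES (?, ?)", [(1, 1200.0), (2, 310.5)]
)

# Source 2 (hypothetical): a CRM system, simulated with a plain dictionary.
crm_records = {
    1: {"name": "Ada", "segment": "retail"},
    2: {"name": "Grace", "segment": "wealth"},
}


def fetch_accounts() -> Iterator[Dict]:
    """Read account rows directly from the relational source on each call."""
    cursor = core_db.execute(
        "SELECT customer_id, balance FROM accounts ORDER BY customer_id"
    )
    for customer_id, balance in cursor:
        yield {"customer_id": customer_id, "balance": balance}


def fetch_crm(customer_id: int) -> Dict:
    """Look up the CRM attributes for one customer."""
    return crm_records.get(customer_id, {})


class CustomerView:
    """Logical view: keeps references to source accessors, never a data copy."""

    def __init__(
        self,
        accounts: Callable[[], Iterator[Dict]],
        crm: Callable[[int], Dict],
    ) -> None:
        self._accounts = accounts
        self._crm = crm

    def query(self, min_balance: float = 0.0) -> List[Dict]:
        """Combine both sources at query time and filter on balance."""
        rows = []
        for account in self._accounts():
            if account["balance"] >= min_balance:
                rows.append({**account, **self._crm(account["customer_id"])})
        return rows


view = CustomerView(fetch_accounts, fetch_crm)
print(view.query(min_balance=1000))
```

Because nothing is replicated, a change made in a source system is visible through the view on the next query, with no reload step in between.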

Data architecture is one area in which data virtualization can make a substantial productivity difference and create tangible business value for the organization. Traditional BI and analytics approaches require building data warehouses and data marts. This is not only labor-intensive but also expensive from an ETL engineering point of view, because it relies on replicating data sources and so multiplies storage systems, each of which requires its own maintenance and security provisions. This only adds to the soaring data storage and maintenance costs that are already eating up between 15 and 20 percent of the average IT budget.

Data virtualization can also make a strong impact in the secondary yet critical role of enabling application programming interfaces (APIs). Banks are already building a large number of APIs, in part triggered by the PSD2 regulation (approximately 43% of all APIs have been built to meet PSD2 requirements). Incumbent financial institutions expose data through these APIs to various parties, including fintechs, governments, insurers, customers, and partners. While API management platforms play a central role in creating and publishing APIs, data virtualization can greatly enhance the capabilities of an API platform. With its logical data abstraction layer, data virtualization can act as a buffer data platform for API transactions outside of the core data systems. The buffer platform shields source systems from outside interactions, greatly enhancing API security and performance. Other data virtualization capabilities, such as data catalogs and data lineage features, significantly improve business user productivity and keep compliance staff informed of all relevant information, so they can more readily discover any untoward incident.
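The buffering role described above can be sketched in a few lines of Python. This is an illustrative assumption, not a description of PSD2 requirements or of any API management product. The rows in `CORE_ROWS`, the `EXPOSED_FIELDS` list, and the functions `api_view` and `handle_accounts_request` are hypothetical names invented for the example. It shows an API handler returning a projected view of the source rows, so internal fields never leave the core system.

```python
from typing import Dict, Iterable, List

# Hypothetical rows as a core banking system might hold them (illustrative only).
CORE_ROWS = [
    {"account_id": "A-1", "currency": "EUR", "balance": 1200.0,
     "internal_risk_score": 0.82},
    {"account_id": "A-2", "currency": "EUR", "balance": 310.5,
     "internal_risk_score": 0.15},
]

# Assumption: only these fields are meant to leave the organization.
EXPOSED_FIELDS = ("account_id", "currency", "balance")


def api_view(rows: Iterable[Dict]) -> List[Dict]:
    """Project each source row onto the exposed fields, returning new dicts."""
    return [{field: row[field] for field in EXPOSED_FIELDS} for row in rows]


def handle_accounts_request() -> List[Dict]:
    """Stand-in for an API endpoint: callers receive the projected view only."""
    return api_view(CORE_ROWS)


response = handle_accounts_request()
print(response[0])
```

The source rows are left untouched, and the set of exposed fields is defined in one place, which is the kind of separation a logical layer is meant to provide between API consumers and core systems.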

How a Major Investment Bank Leveraged Data Virtualization to Reduce Costs and Create Better Customer Experiences

A multinational investment bank partnered with a major global fintech company in 2018 to roll out a digital wealth management platform. The bank built a logical data layer that enables data to flow back and forth between the two organizations, providing a single source of truth to business users while enabling scalability and efficiency. The platform helps thousands of the bank's advisers and staff with front- and back-office tasks, including account opening and trade routing, creating a richer client experience and digitizing enterprise-wide operations. The new wealth management platform will also include a modernized adviser desktop interface with a customizable toolset. This transformation has enabled the bank to onboard customers at a quicker pace and offer product and service advice faster, which contributes significantly to a positive customer experience and improved retention rates. In addition, the bank has substantially reduced costs by retiring around 300 TB of unnecessary data storage along with 170 applications.

Data Virtualization for Financial Services

How successfully and efficiently a financial institution exploits its data assets will determine how well it operates its business and survives changing market conditions. The major incumbent financial institutions are embarking on digital transformation journeys to discover new avenues of growth while staying compliant in an increasingly demanding regulatory environment. The investment bank mentioned earlier is just one example of an ambitious financial institution that has leveraged data virtualization to consolidate and modernize its data infrastructure, meeting demands for scalable, secure, real-time data delivery and enhancing the customer experience without interrupting existing business operations.

To learn more about data virtualization and how it can help your organization ease data woes, keep a low storage footprint, and build agility and scalability into its data architecture, visit the Denodo Financial Services pages.
