Organizations of all sizes and types are harnessing large language models (LLMs) and foundation models (FMs) to build generative artificial intelligence (GenAI) applications that deliver enhanced customer and employee experiences. But to do so, AI application builders or citizen data scientists must extract data from a variety of siloed repositories and piece it together before LLMs can use it. This is challenging, because builders must first identify where the data is stored, how to “speak” to it (whether in a dialect of SQL or another language), which format it is in, and how to access it. And because the data is often scattered throughout the enterprise, it frequently lacks context.

Next, builders need to combine and preprocess the data (clean, tokenize, and normalize it) so that they can customize their models using techniques such as fine-tuning, retrieval-augmented generation (RAG), and prompt engineering. These two challenges (integrating and preprocessing) persist throughout the lifecycle of GenAI application development, because LLMs can’t rely on a single “point-in-time” snapshot of data that keeps changing.
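To make the preprocessing step concrete, here is a minimal sketch of the clean, normalize, and tokenize stages in Python. It is an illustration only: the function names are hypothetical, the regular-expression tokenizer is a stand-in for whatever tokenizer a given model actually uses, and none of this is part of the Denodo Platform or Amazon Bedrock.

```python
import re
import unicodedata


def clean(text: str) -> str:
    """Strip HTML-like tags and collapse runs of whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", no_tags).strip()


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization, then lowercase."""
    return unicodedata.normalize("NFKC", text).lower()


def tokenize(text: str) -> list[str]:
    """Split into simple alphanumeric word tokens.

    Assumption: a real pipeline would use the target model's own tokenizer.
    """
    return re.findall(r"[a-z0-9]+", text)


def preprocess(text: str) -> list[str]:
    """Run the three stages in order: clean, normalize, tokenize."""
    return tokenize(normalize(clean(text)))


if __name__ == "__main__":
    # Made-up sample record, for illustration only.
    print(preprocess("<p>Loan  Amount:\u00a0250,000 USD</p>"))
```

Because the source data keeps changing, a pipeline like this would typically run on a schedule or on demand rather than once.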

These builders need a way to make more corporate data usable for training and customizing LLMs, and for supplying them with context, regardless of where the data lives. To overcome these challenges, Amazon Bedrock’s library of LLMs is now integrated with and supported by the Denodo Platform, enabling organizations to access a wide range of data sources and feed them to their GenAI applications for personalized, relevant insights.

Leveraging the Power of Amazon Bedrock

The Denodo Platform’s integration with Amazon Bedrock provides a GenAI data foundation that helps AI applications such as customer-facing chatbots to access data from a variety of enterprise systems so they can deliver accurate, appropriate responses to customer prompts. It enriches the Amazon Bedrock LLMs with access to trusted, clear, curated, and usable data for better decision making and generating powerful insights. For product builders, it accelerates data product and application development through the use of a single trusted data abstraction layer, helping builders to make sense of the available data and know which data is best to use to get the results they need.

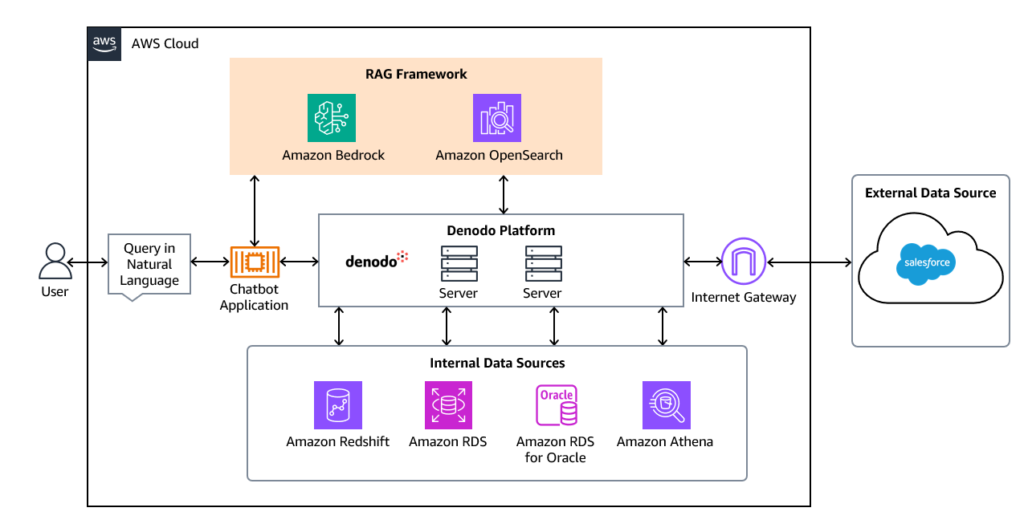

Techniques like RAG help bridge the gap between enterprise GenAI apps and the enterprise data that can support them. Although RAG is a great tool, and LLMs have enabled natural-language-to-SQL translation, both capabilities fall short when enterprise data is scattered across a complex, heterogeneous data landscape. In the reference architecture below, you can see how the Denodo Platform feeds the RAG framework, leveraging Amazon OpenSearch Service as the vector database to drive the chatbot application with enterprise-wide data across Amazon S3, Amazon RDS, and more. Unified security and credential passthrough also make all of the organization’s data available to RAG queries, while ensuring that each user sees only the data that user is authorized to access.

In the example above, a mortgage processing company integrated the Denodo Platform with RAG models on Amazon Bedrock to empower loan officers with GenAI and LLMs to efficiently handle complex queries and tasks. The Denodo Platform’s data virtualization layer unifies fragmented data sources like the EDW, CRM systems, loan origination software, and compliance documents, ensuring the RAG-enabled model has access to comprehensive, up-to-date information. When a loan officer submits a natural language query, the retrieval component fetches relevant data from virtualized sources, such as eligibility criteria or regulatory guidelines, which the language model then processes to generate detailed, contextual responses tailored to the customer’s needs. This approach streamlines intricate processes like pre-qualifying customers, preparing loan packages, and addressing underwriter requests, enabling loan officers to provide accurate, compliant, and personalized service while leveraging the power of GenAI.
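The retrieve-then-generate flow described here, including the rule that a user only sees data they are entitled to, can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the Denodo or Amazon Bedrock implementation: a bag-of-words cosine similarity stands in for vector search, a role check stands in for the platform's unified security, `generate` is a placeholder for a call to a model, and all names and sample documents are made up.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    text: str
    source: str               # illustrative label for the originating system
    allowed_roles: frozenset  # roles permitted to read this document


def _vector(text: str) -> Counter:
    """Bag-of-words term counts (stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question, docs, user_role, k=2):
    """Filter by the user's role first, then rank what remains by similarity."""
    visible = [d for d in docs if user_role in d.allowed_roles]
    q = _vector(question)
    scored = [(_cosine(q, _vector(d.text)), d) for d in visible]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked[:k] if score > 0]


def build_prompt(question, context_docs):
    """Assemble the retrieved text and the question into a single prompt."""
    context = "\n".join(f"[{d.source}] {d.text}" for d in context_docs)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(question, docs, user_role, generate):
    """`generate` is any callable that maps a prompt string to a reply string."""
    return generate(build_prompt(question, retrieve(question, docs, user_role)))


# Made-up sample documents, for illustration only.
DOCS = [
    Document("Eligibility criteria for product A: applicant income must be verified",
             "compliance", frozenset({"loan_officer", "underwriter"})),
    Document("Underwriter memo on income verification exceptions",
             "underwriting", frozenset({"underwriter"})),
    Document("Customer contact preference is email",
             "CRM", frozenset({"loan_officer"})),
]

if __name__ == "__main__":
    question = "What are the eligibility criteria for income?"
    # An identity function stands in for the model, so the prompt itself is printed.
    print(answer(question, DOCS, "loan_officer", generate=lambda prompt: prompt))
```

Filtering before ranking is the key design choice in this sketch: a document the user may not read never reaches the prompt, so the model cannot leak it. In a real deployment, `generate` would wrap a call to a model hosted on Amazon Bedrock.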

The Denodo Platform and Amazon Bedrock: An Intelligent Approach

In summary, these AI-based capabilities can have a strong impact on many kinds of users, from business users to developers, administrators, and data stewards. They improve usability for end users through natural language queries, increase development productivity with an AI-based copilot that assists in developing views, and simplify administration and stewardship of the system. The Denodo Platform plays a fundamental role in the GenAI domain by seamlessly integrating with the LLM technology ecosystem. This integration gives chatbots unified, secure access to an organization’s data sources, enabling them to draw on structured data to produce precise responses to information requests.

Learn more about the Denodo Platform for AWS and accelerating data integration and access, no matter where your data resides.
