The field of artificial intelligence (AI) is more exciting than ever. The climate is full of creativity and innovation, and we’re learning that large language models (LLMs) have capabilities we can exploit in many ways. In previous posts, I spoke about leveraging them to securely ask questions and perform semantic searching across the enterprise, and these capabilities will no doubt change the way we interact with information. In this post, I’ll talk about how LLMs can enable software agents that can autonomously take advantage of the capabilities I mentioned in my first post in this series. So, let’s now delve into this topic of intelligent autonomous agents (IAAs).

What are Intelligent Autonomous Agents?

Intelligent autonomous agents (IAAs) are systems that can understand their environment, analyze information, make decisions, and act independently to achieve specific goals. They can operate without constant human supervision to streamline processes and improve efficiency across a variety of different applications.

This might sound a bit daunting and evoke images from movies like “The Matrix,” in which intelligent agents and machines have taken over the world. In contrast, the IAAs in development today are designed to assist and enhance human capabilities, not to control or oppress us. We’ve seen agents in action for a while now in the form of smart thermostats, lighting systems, AI chatbots, automated production lines, and robotic vacuum cleaners. More recent advancements include self-driving cars and delivery drones.

Historically, these technologies have operated within controlled software frameworks, following specific models, rules, tasks, and behaviors. Today, however, they are becoming more sophisticated and can now function autonomously in more complex, dynamic environments. They have the potential to transform industries and realize previously unimaginable scenarios.

Intelligent Autonomous Agents in the Enterprise

For organizations today, IAAs can bring significant value in the form of enhanced efficiency, convenience, and capabilities. They can automate repetitive, time-consuming tasks, boosting productivity and freeing people up to do more complex, creative work. These agents are tireless and work around the clock, and because they scale, they can increase activity levels and take on additional tasks as needed without compromising performance.

When we expose information to such agents, they can effectively assess their environment and respond appropriately. This ability to analyze information and react in real time to changing conditions enables IAAs to optimize processes, reduce errors, and swiftly adapt to new challenges, enhancing the overall responsiveness and agility of the business.

What Role Can IAAs Play in Expanding the Powers of LLMs?

We learned earlier that LLMs can write SQL, but they can also assess API specifications. They can decide whether an API needs to be called, and they can take information from an interaction and use it to structure the input needed to call that API. The LLM cannot call the function itself, but it knows enough to set up the call. You are still in control of the actual execution.
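As a minimal sketch of this division of labor, the snippet below assumes the LLM returns its proposed call as a JSON string. The function `get_account_balance`, the `ALLOWED_FUNCTIONS` registry, and the JSON shape are all hypothetical and chosen only for illustration. The point is that the application, not the model, decides whether the call actually runs.

```python
import json

# Hypothetical function the application is willing to run on the LLM's behalf.
def get_account_balance(account_id: str) -> dict:
    # Stand-in for a real API call; returns fixed data for illustration.
    return {"account_id": account_id, "balance": 1250.00}

# Only functions listed here can ever be executed.
ALLOWED_FUNCTIONS = {"get_account_balance": get_account_balance}

def execute_proposed_call(llm_output: str) -> dict:
    """Validate and run a function call that the LLM proposed as JSON."""
    proposal = json.loads(llm_output)
    name = proposal["name"]
    if name not in ALLOWED_FUNCTIONS:
        raise ValueError(f"Function not allowed: {name}")
    return ALLOWED_FUNCTIONS[name](**proposal["arguments"])

# Simulated LLM output: the model names the function and fills in the arguments.
llm_output = '{"name": "get_account_balance", "arguments": {"account_id": "A-1001"}}'
result = execute_proposed_call(llm_output)
print(result)
```

Because execution goes through the allow-list, a proposal that names an unknown function is rejected before anything runs.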

The integration of IAAs into the LLM workflow represents a significant leap forward as IAAs are fully capable of executing any necessary functions. Beyond that, they provide a generalized means of incorporating autonomous decision making. When you use LLMs to elicit a decision with an IAA onboard, you do not need much structure. You can provide information and instructions in natural language instead of having to write code. LLMs can evaluate vast amounts of natural language data, which enhances their ability to interact with human users and other AI systems more effectively. For example, IAAs can now delegate tasks to other specialized systems through APIs, manage complex datasets, and respond dynamically to changes in their operating environment. Given that IAAs can also delegate to other IAAs, you can start to see how powerful this can be.

AI expert Andrew Ng recently spoke about how we can use LLMs to implement agent-based workflows that can leverage a myriad of services and functions in an enterprise as well as other agents, in this enlightening 15-minute video. It encapsulates the growing trend towards systems that are not only reactive but also proactive, capable of autonomously managing more of the world’s complexities. This suggests a future in which IAAs could manage aspects of daily life and business operations with minimal human oversight, paving the way for innovations that could transform not only organizations but society at large.

To trigger IAAs to act, developers do not need to write the decision logic; LLMs are fully capable of making that decision. The process can be quite sophisticated as well. When you get a sense of the multitude of prompt techniques that are available, such as the thirty (and growing) that are published at that link, you can get a better appreciation of what IAAs can achieve. These application areas range from understanding user intent and reducing hallucinations to reasoning, logic, self-reflection, and managing emotions and tone. You can start to see how combining many of these techniques can be very effective.

Data Fabric and IAAs

Data fabric is the backbone of a company’s data system. It helps us ask questions and find information across the entire organization. But it can do more than just provide information—it can also collect data and perform tasks.

Data fabric provides consistent interaction with our systems and services. It provides a dynamic structure for our assets, secure access to information, and easy integration with a variety of tools.

The real power of data fabric is its ability to provide consistent semantics and metadata across the enterprise. This is crucial for integrating services with LLMs. Data fabric dynamically structures enterprise assets and secures access to information through direct queries and web-based services. These features extend seamlessly to IAAs.

If you’re familiar with Denodo, you might have heard about the capabilities of data fabric. But here’s something you might not know: Within a Denodo-Platform-enabled data fabric, you can create services on database tables, stored procedures, remote functions (such as those in SAP), and even other services. For example, you can transform SAP remote function calls (RFCs) into REST services, enabling both read and write operations. Similarly, you can create services on database tables that support these operations.

Denodo data fabric ensures that all these services come with comprehensive governance, security, and rich semantic metadata. The metadata is vital for simplifying the sharing of web services with an LLM. By using Denodo data fabric, you establish a consistent framework that provides both democratized data and services to business users.
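To illustrate why rich service metadata makes sharing services with an LLM simpler, here is a small sketch that turns metadata records into text suitable for a prompt. The record fields (`name`, `description`, `parameters`) and the two example services are assumptions made for this example; they are not the Denodo catalog format.

```python
# Hypothetical metadata records, shaped like what a service catalog might expose.
# The field names are assumptions for illustration only.
SERVICES = [
    {
        "name": "get_order_status",
        "description": "Return the current status of a customer order.",
        "parameters": {"order_id": "string"},
    },
    {
        "name": "update_shipping_address",
        "description": "Change the shipping address on an open order.",
        "parameters": {"order_id": "string", "address": "string"},
    },
]

def describe_services(services: list[dict]) -> str:
    """Render service metadata as plain text that can be placed in an LLM prompt."""
    lines = ["You may request one of these functions by name:"]
    for service in services:
        params = ", ".join(
            f"{name}: {kind}" for name, kind in service["parameters"].items()
        )
        lines.append(f"- {service['name']}({params}): {service['description']}")
    return "\n".join(lines)

prompt_section = describe_services(SERVICES)
print(prompt_section)
```

The better the descriptions in the metadata, the less hand-written prompt text is needed for each new service.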

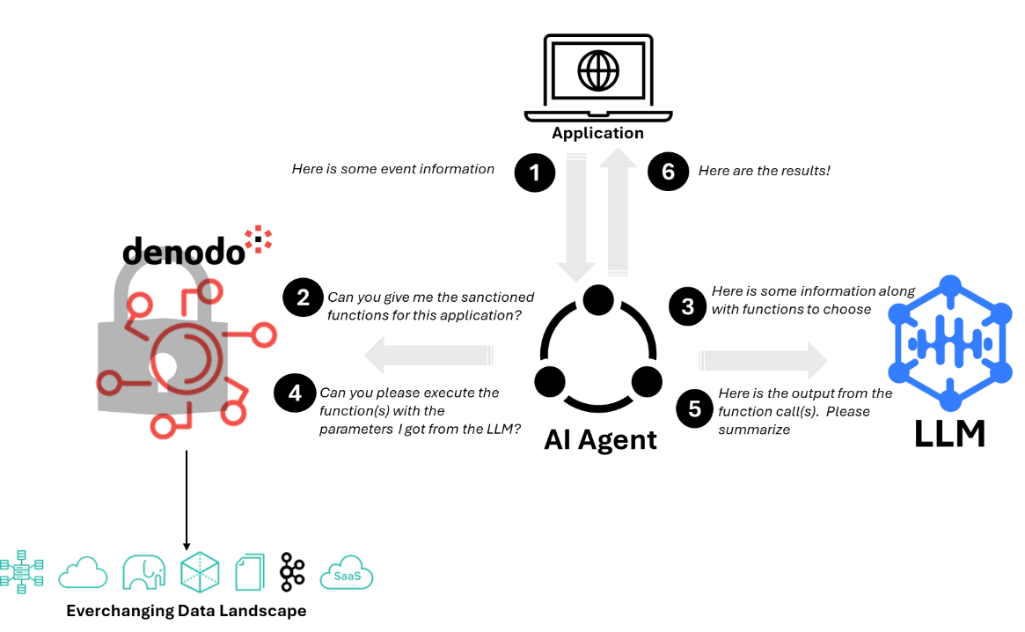

As with natural language queries, the data fabric supplies metadata and specification information for its services. Visualize an AI agent leveraging both an LLM and the data fabric. When an application interacts with the AI agent, it uses the data fabric to access relevant functions associated with the application. The AI agent can then use the function metadata to craft prompts for the LLM. The accompanying diagram depicts this exchange.

During the interaction, the LLM determines if it has enough information or if calling one or more functions is necessary. If a function call is required, the LLM provides the needed details to the AI agent, which executes the function and sends the results back to the LLM for summarization.
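The exchange described above can be sketched as a simple loop. This is a rough illustration under stated assumptions, not a definitive implementation: `fake_llm` is a scripted stand-in for a real model, and `lookup_order`, the message format, and the `max_steps` limit are all hypothetical.

```python
import json
from typing import Callable

# Hypothetical function the agent can execute on the LLM's behalf.
def lookup_order(order_id: str) -> dict:
    return {"order_id": order_id, "status": "shipped"}

FUNCTIONS: dict[str, Callable[..., dict]] = {"lookup_order": lookup_order}

def fake_llm(messages: list[dict]) -> dict:
    """Scripted stand-in for a model: requests one function call, then summarizes."""
    last = messages[-1]
    if last["role"] == "function":
        data = json.loads(last["content"])
        return {
            "type": "answer",
            "content": f"Order {data['order_id']} is {data['status']}.",
        }
    return {
        "type": "function_call",
        "name": "lookup_order",
        "arguments": {"order_id": "42"},
    }

def run_agent(user_message: str, llm=fake_llm, max_steps: int = 5) -> str:
    """Loop until the LLM answers, executing any function calls it requests."""
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        reply = llm(messages)
        if reply["type"] == "answer":
            return reply["content"]
        # The agent, not the LLM, executes the function and reports the result.
        result = FUNCTIONS[reply["name"]](**reply["arguments"])
        messages.append(
            {"role": "function", "name": reply["name"], "content": json.dumps(result)}
        )
    raise RuntimeError("Agent did not finish within max_steps")

answer = run_agent("Where is order 42?")
print(answer)
```

The step limit is a design choice in this sketch: it keeps a misbehaving model from requesting function calls indefinitely.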

These functions can perform various tasks, such as updating systems, retrieving information, or sending triggers. Envision multiple AI agents handling different tasks or workflows. For example, one agent could enable users to ask questions in natural language. These AI agents can also call each other or standard functions, making the system incredibly flexible and efficient.
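A toy sketch of agents calling each other might look like the following. The agent names are hypothetical, and the keyword rule in `router_agent` is only a stand-in for the decision that an LLM would make in practice.

```python
from typing import Callable

# For this sketch, an agent is any callable that takes a message and returns a reply.
Agent = Callable[[str], str]

def qa_agent(message: str) -> str:
    # Hypothetical agent that would answer natural language questions.
    return f"[qa] answering: {message}"

def issue_agent(message: str) -> str:
    # Hypothetical agent that would log reported issues.
    return f"[issues] logged: {message}"

def router_agent(message: str, agents: dict[str, Agent]) -> str:
    """Delegate to another agent; the keyword rule stands in for an LLM decision."""
    target = "issues" if "problem" in message.lower() else "qa"
    return agents[target](message)

AGENTS = {"qa": qa_agent, "issues": issue_agent}
reply = router_agent("I have a problem with my invoice", AGENTS)
print(reply)
```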

The integration of semantics and metadata through the Denodo data fabric and AI agents is truly transformative, enabling smarter, more dynamic interactions and workflows. It’s an exciting development that pushes the boundaries of what we can achieve with our data infrastructure.

An Example Scenario

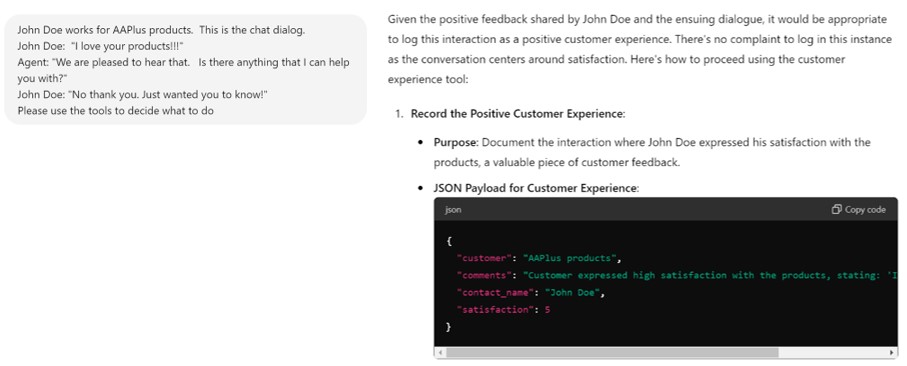

In my previous posts, I described an example application that enabled account representatives to use natural language to query a data fabric directly as well as to index and securely retrieve information. The account representatives are true believers in the power and versatility of LLMs and data fabric. They now want more insight into their interactions, in the hope of deploying the application to actual customers. We decided to create an AI agent that knows how to assess customer interactions. This AI agent will review customer interactions, determine whether there are any issues, and log them. It will also review the exchange and log a satisfaction score along with an explanation of the score. This will give us insight into how effective the application is and provide immediate information about the issues people are having.

To support the tasks, we can create two services in the Denodo data fabric: One that logs customer complaints into a database and one that logs the satisfaction rating and explanation. When we add these services to our application database, we associate them with the application. We can now build the agent that can deliver the prompts necessary for the LLM to perform its reasoning. Given the complexity of this topic, I decided to give ChatGPT the metadata for the two services and then provided it with the following sample chat dialogs:

These are pretty simple examples, but they imply that any kind of service could be represented in the data fabric. Imagine if we included services that created an entry in an incident tracking system or triggered an event to start an investigative workflow.
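As a rough illustration of the two logging services described above, the sketch below uses local Python functions and an in-memory SQLite database as stand-ins. The function names, table layouts, and the 1-to-5 score range are assumptions made for this example; in the scenario itself, these would be services published through the data fabric.

```python
import sqlite3

# In-memory database standing in for the application database (an assumption).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE complaints (customer_id TEXT, complaint TEXT)")
conn.execute(
    "CREATE TABLE satisfaction (customer_id TEXT, score INTEGER, explanation TEXT)"
)

def log_complaint(customer_id: str, complaint: str) -> None:
    """Hypothetical stand-in for the complaint-logging service."""
    conn.execute("INSERT INTO complaints VALUES (?, ?)", (customer_id, complaint))

def log_satisfaction(customer_id: str, score: int, explanation: str) -> None:
    """Hypothetical stand-in for the satisfaction-logging service."""
    if not 1 <= score <= 5:  # the 1-to-5 scale is an assumption
        raise ValueError("score must be between 1 and 5")
    conn.execute(
        "INSERT INTO satisfaction VALUES (?, ?, ?)", (customer_id, score, explanation)
    )

# Calls an LLM might propose after reviewing a chat (hand-written here).
proposed_calls = [
    ("log_complaint", {"customer_id": "C-17", "complaint": "Invoice total was wrong."}),
    ("log_satisfaction", {"customer_id": "C-17", "score": 2, "explanation": "Issue unresolved."}),
]
handlers = {"log_complaint": log_complaint, "log_satisfaction": log_satisfaction}
for name, arguments in proposed_calls:
    handlers[name](**arguments)

complaints = conn.execute("SELECT * FROM complaints").fetchall()
scores = conn.execute("SELECT customer_id, score FROM satisfaction").fetchall()
print(complaints, scores)
```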

Once we implement this functionality, we can also analyze the issues users are reporting as well as the experience they are having with our chat application and our company. This would provide insight into how our GenAI application is working and what users are experiencing. We can then decide if our semantics are correct or if users would benefit from more information. Note that we did not have to create a survey or application. We simply had to make the information about these services available to the LLM from the data fabric.

Hopefully, this post has gotten your creative juices flowing and shown that there are quite a few ways to expand on this. We could have an AI agent categorize the issues, route them, or place event triggers to start workflows. The possibilities are endless.

The Value in Leveraging a Logical Data Fabric

I hope that in this series, I have helped you see that for all of the critical capabilities we needed to leverage from the LLM, we needed access to enterprise data. The Denodo data fabric provides consistent access to the enterprise in any scenario, through an LLM framework. AI capabilities are growing fast, and if you want to take advantage of this, you need to simplify your access to enterprise data. Abstracting from the underlying data sources is the future-proof way to do it, and that is what the Denodo data fabric offers.

The power to affect the semantic representation of data and activities is key to working with AI. My previous posts dealt with collecting and querying information. With the Denodo data fabric, you can leverage web services creation capabilities to update databases or use remote calls and APIs to update systems and present them in business terms. AI agents can dynamically access the metadata and specifications via the data fabric to increase the accuracy of information used to call these functions. The Denodo data fabric decouples AI agents from the data and the completion of tasks, so that expertise can be applied where it is needed, independent of the implementation. This means:

- The ability to leverage a consistent AI agent framework

- Distributed management of data and database schemas

- Delegated security and compliance

- The ability to extend new applications via new virtual databases

- A consistent approach and consistent processes

- A simplification of the data model

- A separation of concerns and a decoupling of AI agents from data and task completion

- Maintainability and extensibility

By using the Denodo data fabric, we have simplified the integration of function calling. We can virtualize services, secure them, and make them available. Application owners can adjust descriptions and required information within the data fabric. This enables them to control behavior by specifying it in natural language. This separation of concerns not only simplifies the overall data architecture but also enhances maintainability and extensibility. Because the Denodo data fabric enables seamless data governance across the entire fabric from a single point of control, it also provides organizations with the means to prevent their GenAI applications from operating outside of secure guardrails. Domain experts can manage semantics, security, and which aspects of the enterprise they want to share. AI developers can focus on writing and enhancing frameworks, knowing they have a consistent, secure interface to both data and services. Data managers can determine the best ways to persist and source information. Additionally, the fabric’s ability to dynamically adjust to changes in the data environment helps maintain high performance levels and up-to-date data access, both of which are crucial for maintaining competitive advantage.

Logical data management solutions like the Denodo Platform facilitate the integration, structuring, securing, and utilization of information, accelerating delivery, reuse, and improvements. This enables organizations to empower AI applications that leverage intelligent autonomous agents.

Summing Up the Series

I covered quite a bit in this series. In my first post, I introduced the potential for RAG and data fabric to clean up LLMs. Part 2 covered the use of natural language to ask questions of your enterprise data, Part 3 covered semantic searching and secure retrieval, and this last post covered intelligent autonomous agents. The Denodo Platform can accelerate all of these capabilities and help you place guardrails to ensure success. I hope that I have sparked some interest and excitement about leveraging a data fabric and Generative AI in your organization. Thanks for reading!
